4 reasons Zoom ‘digital twins’ are a bad idea

Why IT leaders should not encourage using AI to replace decision-makers in meetings.

The Verge recently interviewed Eric Yuan, the CEO of Zoom, about how Zoom might incorporate AI into its video meeting platform. Some of Yuan’s comments concerned me, and I want to respond to them here.

The future of AI ‘digital twins’

The interviewer asked how organizations might use technology to automate or streamline tasks. Yuan replied that “we are embarking on a 2.0 journey,” explaining that “looking back at 1.0, it was more about building some applications; videoconferencing is one of them,” and that “now, [when] you look at a 2.0, it is different. It’s Work Happy with the Zoom AI Companion and everything really about Workplace, the entire collaboration platform as well as AI.”

Yuan went on to explore the future of AI at work, commenting that “given the AI era, everyone is thinking about how to leverage AI more and more, to make everything fully automated, to reduce manual work, and to reduce human involvement.”

Yuan envisions a future where AI can respond to everything and make decisions, saying:

Today for this session [interview], ideally, I do not need to join. I can send a digital version of myself to join so I can go to the beach. Or I do not need to check my emails; the digital version of myself can read most of the emails. Maybe one or two emails will tell me, “Eric, it’s hard for the digital version to reply. Can you do that?” Again, today we all spend a lot of time either making phone calls, joining meetings, sending emails, deleting some spam emails and replying to some text messages, still very busy. How [do we] leverage AI, how do we leverage Zoom Workplace, to fully automate that kind of work? That’s something that is very important for us.

This is an inspiring view of the future, with AI as a “work assistant.” We already have some of this: both Gmail and Outlook, for example, suggest quick replies to emails, such as a way to thank someone for positive feedback or to respond to a client after a successful engagement.

However, Yuan also sees a time when AI can replace decision-makers in meetings, making this comment:

For a very important meeting I missed, given I’m the CEO, they’re probably going to postpone the meeting. The reason why is I probably need to make a decision. Given that I’m not there, they cannot move forward, so they have to reschedule. You look at all those problems. Let’s assume AI is there. AI can understand my entire calendar, understand the context.

At the same time, every morning I wake up, an AI will tell me, “Eric, you have five meetings scheduled today. You do not need to join four of the five. You only need to join one. You can send a digital version of yourself.” For the one meeting I join, after the meeting is over, I can get all the summary and send it to the people who couldn’t make it. I can make a better decision. Again, I can leverage the AI as my assistant and give me all kinds of input, just more than myself. That’s the vision.

For the one meeting Yuan does attend, using AI to summarize it is a powerful value-add. Being able to loop in people who couldn’t attend, and to give them a reasonable summary of the meeting, can be extremely valuable.

At the same time, organizations should be concerned about taking human decision-makers out of the loop for the other four meetings Yuan described. Here are several issues for leaders to consider:

1. AI is not really ‘intelligence’

What most people today call “AI” or “artificial intelligence” is really a generative large language model (LLM). These systems are first trained on a huge data set, from which the model learns statistical patterns in language. Generating a response is then essentially a statistical prediction of the next word or phrase, based on the context of the question and the text the system has already generated.

This is somewhat simplified, but it accurately describes how LLMs work at a high level. For more detail, you might watch this excellent video on how generative text transformers work, as explained by a deepfake Ryan Gosling.

2. AI doesn’t actually understand

LLMs do very well at certain tasks, including summarizing information and translating content. However, they do not truly “understand” the content as a human would. The word “intelligence” in “artificial intelligence” is a misnomer when applied to LLMs.

Yuan’s vision of AI as a “digital twin” to represent a decision-maker in a meeting assumes that AI will evolve to develop true intelligence that understands the content of a discussion and its implications. Yuan explains:

Let’s say the team is waiting for the CEO to make a decision or maybe some meaningful conversation, my digital twin really can represent me and also can be part of the decision making process. … Essentially, that’s the foundation for the digital twin. Then I can count on my digital twin. Sometimes I want to join, so I join. If I do not want to join, I can send a digital twin to join. That’s the future.

Yuan acknowledges that we aren’t there yet, but predicts that AI will reach this level within “a few years.” That remains to be seen, and I’m not optimistic that a few years will be enough for the necessary AI transformation. In the meantime, consider this scenario:

Imagine a daily stand-up meeting, where the team summarizes the previous day or week and looks ahead to the next day or week. This is a pretty straightforward meeting, so the CEO might send a digital twin. During the meeting, the team realizes they need a decision, perhaps something typical like “We aren’t going to make the date that we promised at the shareholder event. Do we release a statement to the press about the delays, or just keep going?”

If the CEO has set the expectation that the digital twin can make decisions in their place, then whatever response the AI generates will be what the organization goes with. But we’re seeing that AI can produce some wild recommendations, such as putting glue on pizza to make the cheese stick, or drinking urine to pass kidney stones.

In these examples, Google claims its AI Overviews feature returned such odd results because of a “data void,” or information gap, made worse by users asking nonsensical questions. But another way to view this is that AI doesn't actually understand reality the way humans do: it cannot judge whether a question really makes sense, or whether the person asking it is earnest.

3. Liability concerns with AI decisions

Some decisions are best left to humans. But by setting an expectation that the AI can make decisions on behalf of the CEO, organizations open themselves to the consequences of those decisions.

One concerning but realistic scenario is an AI digital twin making a decision that affects someone’s job. The decision may not have an obvious impact at first, but its knock-on effects could later cost a human their job. If the affected employee brings forward a grievance, the organization could face liability.

4. Training staff to accept deepfakes as real

By normalizing AI digital twins as stand-ins for real decision-makers in virtual meetings, CEOs teach staff to accept deepfakes as carrying authority. This creates real-world security risks for the organization.

Imagine a scenario where a financial analyst is called into a virtual meeting with the CEO and CFO. The CEO describes a project the organization is already working on, and explains that certain investments must be made immediately to drive the project forward. Specifically, the CEO asks the financial analyst to make several large-value money transfers to different accounts, totaling over one million dollars.

However, after the meeting, the financial analyst learns the CEO and CFO were not actually present. Instead, they were real-time deepfakes modeled on publicly available photos of both executives, possibly pulled from the organization’s website.

You don’t have to imagine a scenario like this; it is already reality. Earlier this year, a multinational company was targeted by a deepfake scam that resulted in an employee transferring $25 million to the scammers.

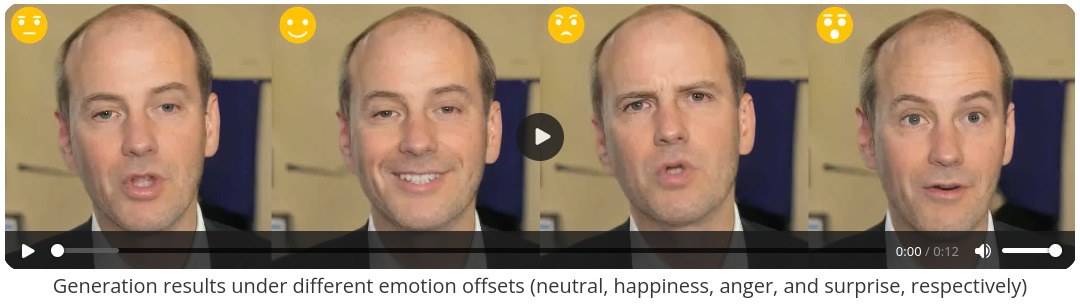

With technology like Microsoft’s VASA-1, it’s possible to generate a realistic digital twin of a real person from a single photo, complete with realistic facial expressions and natural head movements. VASA-1 animates that “digital puppet” with precise lip sync to an audio track, and that audio could be generated by text-to-speech that mimics the person’s voice. VASA-1 is not available for sale, but others are sure to release software like it soon.

All of this is generated in real time: up to 40 fps in the online streaming mode, with a preceding latency of only 170 ms, evaluated on a desktop PC with a single NVIDIA RTX 4090 GPU.

Dell sells such a desktop system for less than $4,000, a tiny investment for a potential payoff that could be worth millions.
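To put the real-time claim in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only the two figures Microsoft reported for VASA-1 (40 fps and a 170 ms preceding latency); everything else is simple arithmetic, and the variable names are illustrative.

```python
# Figures reported by Microsoft for VASA-1's online streaming mode.
FPS = 40
STARTUP_LATENCY_MS = 170

# Time available to produce each frame at the reported frame rate.
frame_interval_ms = 1000 / FPS

# How many frame intervals the initial delay spans.
frames_during_startup = STARTUP_LATENCY_MS / frame_interval_ms

print(f"{frame_interval_ms:.1f} ms per frame")
print(f"startup delay is about {frames_during_startup:.1f} frame intervals")
```

Each frame has a budget of 25 ms, and the entire startup delay is under a fifth of a second.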

Balancing risk with value

Generative AI like ChatGPT and Google’s Gemini, and like some of the AI technology Yuan envisions, can be a force multiplier for organizations. Leveraged effectively and with guidance, AI can streamline, automate, or even remove repetitive tasks, freeing staff to do more valuable work. But organizations should be cautious about taking humans out of the decision-making loop altogether.